
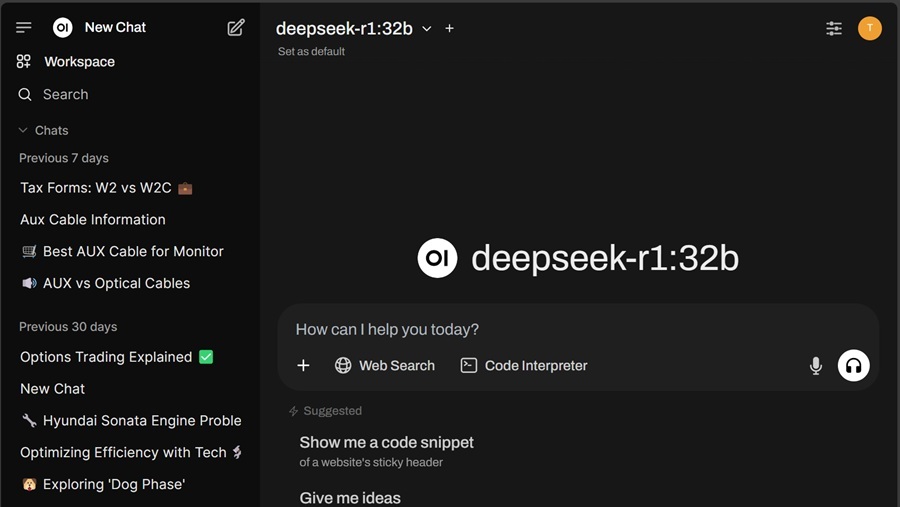

Privately Chat with DeepSeek R1 on Windows in 5 Minutes

Run DeepSeek R1 AI privately on your Windows PC in 5 minutes with Ollama. Chat locally without sending data to external servers.

In the AI race, we have reached the point where AIs are taking AI jobs. OpenAI and the other big players are scrambling to respond to a new threat: open-source LLMs.

For us, open-source LLMs are a good thing. They mean anyone can make their own “ChatGPT at home”. A notable example, and likely what brought you here, is DeepSeek R1.

Open-source LLMs are not new, but this one is different. Alongside the full model, it was released in smaller distilled versions that can run on a much wider range of lower-end systems. You can likely run one on your own system, and here’s how to do it with everything staying on your machine.

Download Ollama

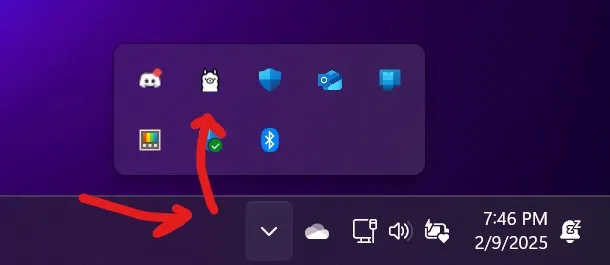

Visit https://ollama.com/download/windows and install the Windows version. Once installed, this program runs in the background.

If the installation succeeded, you will see the Ollama icon in your system tray.

Note: If you don’t see it, run it manually like any other program.
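If the tray icon is hard to spot, another way to check that Ollama is running in the background is to ask its local server directly. The sketch below is written in Python, which this tutorial does not otherwise require. It assumes Ollama is listening on its default local address, http://localhost:11434, and the function name ollama_is_running is made up for illustration.

```python
import urllib.error
import urllib.request

# Assumption: Ollama uses its default local address and port.
OLLAMA_URL = "http://localhost:11434"


def ollama_is_running(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if a server answers with HTTP 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, and similar errors all mean "not reachable".
        return False


if ollama_is_running():
    print("Ollama is running.")
else:
    print("Ollama is not reachable. Try starting it manually.")
```

The check only talks to your own machine, so nothing leaves your PC.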

What is Ollama?

Ollama is a platform that lets you download LLMs and run them locally and privately on your system. It doesn’t have a GUI, so we use the terminal to interact with it.

Download DeepSeek R1

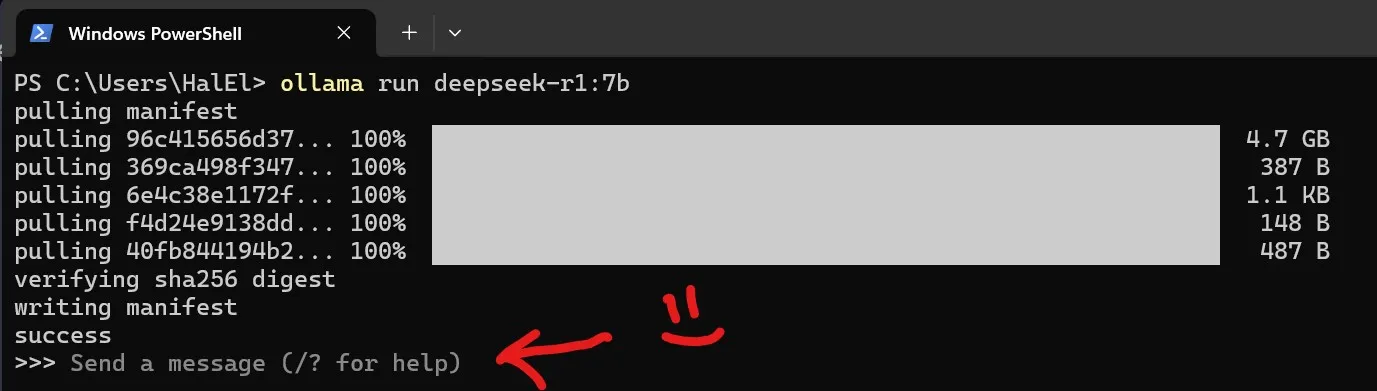

To get started, we need to open either Command Prompt or PowerShell.

We have only one command to run! It downloads the model and starts it as soon as the download finishes.

ollama run deepseek-r1:7b

If you see “Send a message (/? for help)” in your terminal, DeepSeek R1 is waiting for you.

Once at this point, you can start chatting! 😁

To finish chatting, type /bye to exit.
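The terminal chat is not the only way in. While Ollama runs in the background, it also answers HTTP requests on your own machine, so a short script can send a single prompt and read the reply. This is a minimal sketch, assuming the default local address http://localhost:11434 and that the deepseek-r1:7b model from the command above is already downloaded. The helper names build_request and ask are made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default address.
GENERATE_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "deepseek-r1:7b") -> urllib.request.Request:
    """Build a POST request that asks the local model for one complete answer."""
    payload = {
        "model": model,    # same model name used with `ollama run`
        "prompt": prompt,
        "stream": False,   # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        GENERATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "deepseek-r1:7b", timeout: float = 300.0) -> str:
    """Send the prompt to the local Ollama server and return the reply text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["response"]
```

With Ollama running, calling print(ask("Why is the sky blue?")) should print the model's answer. The request goes to localhost only, so the chat stays as private as it is in the terminal.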

If this model doesn’t seem very bright, smarter versions are available, provided your system can run them.

Smarter/Weaker Versions of DeepSeek R1 are available

You can download a smarter or weaker version by changing the tag after the colon in the command (the 7b in deepseek-r1:7b).

A list of different versions can be found here: https://ollama.com/library/deepseek-r1:7b

Here is an example of running the weakest version:

ollama run deepseek-r1:1.5b

Issues

- Error about system memory? - Use a weaker version.

- Warning about using the CPU? - It will just run slower. If you can, run it on a system with a Graphics Card (GPU).
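When a memory error sends you looking for a weaker version, it can help to see which versions are already downloaded and how large each one is. The sketch below asks the local Ollama server for its list of models. It assumes the default address http://localhost:11434, and the helper names summarize_models and downloaded_models are made up for illustration. Treat the size on disk as a rough hint only, not an exact measure of the memory a model needs.

```python
import json
import urllib.error
import urllib.request

# Assumption: Ollama is running locally on its default address.
TAGS_URL = "http://localhost:11434/api/tags"


def summarize_models(tags_response: dict) -> list[tuple[str, float]]:
    """Return (name, size in GB) pairs, smallest model first."""
    models = tags_response.get("models", [])
    pairs = [(m["name"], round(m["size"] / 1e9, 1)) for m in models]
    return sorted(pairs, key=lambda pair: pair[1])


def downloaded_models(timeout: float = 5.0) -> list[tuple[str, float]]:
    """List downloaded models; return an empty list if the server cannot be read."""
    try:
        with urllib.request.urlopen(TAGS_URL, timeout=timeout) as response:
            return summarize_models(json.loads(response.read().decode("utf-8")))
    except (urllib.error.URLError, OSError, ValueError):
        # Unreachable server or a reply that is not valid JSON.
        return []


for name, size_gb in downloaded_models():
    print(f"{name}: {size_gb} GB on disk")
```

If nothing is printed, either Ollama is not running or no models have been downloaded yet.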

Chat Away!

Still here? You have now privately run your own LLM. You could start brainstorming your own Jarvis. 😁