
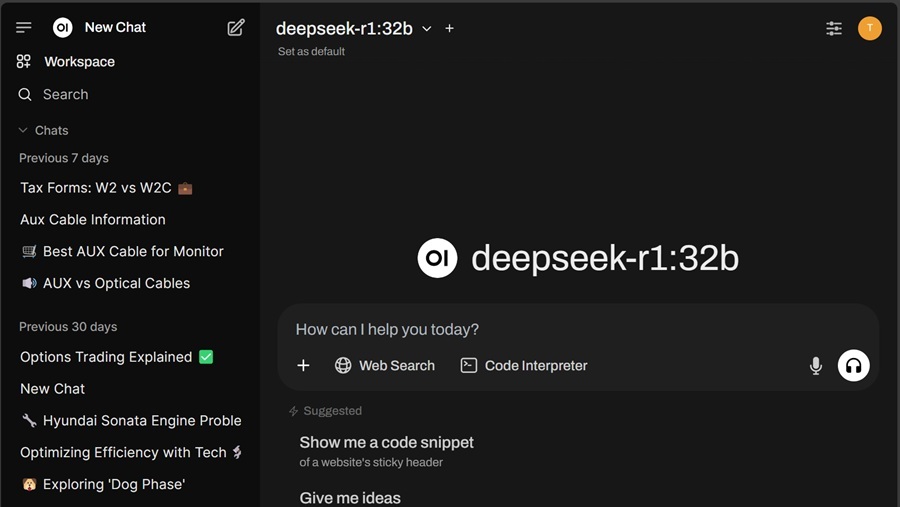

WebUI Solution: Chat Privately With Your Local AI

Set up OpenChat with Ollama for a fully private local AI experience.

Ever wish you could host a private ChatGPT alternative on your own machine? OpenChat (Open WebUI) provides an easy, fully offline setup—especially if you’ve already installed Ollama for local AI inference.

Prerequisites

- Ollama

If you need help installing Ollama, see our Privately Chat with DeepSeek R1 on Windows in 5 Minutes post.

What is OpenChat?

OpenChat is a self-hosted AI platform that integrates with Ollama to offer a complete offline chat experience, including features like local RAG, voice/video chat, and Markdown—all while keeping your data on your PC.

How To Install OpenChat

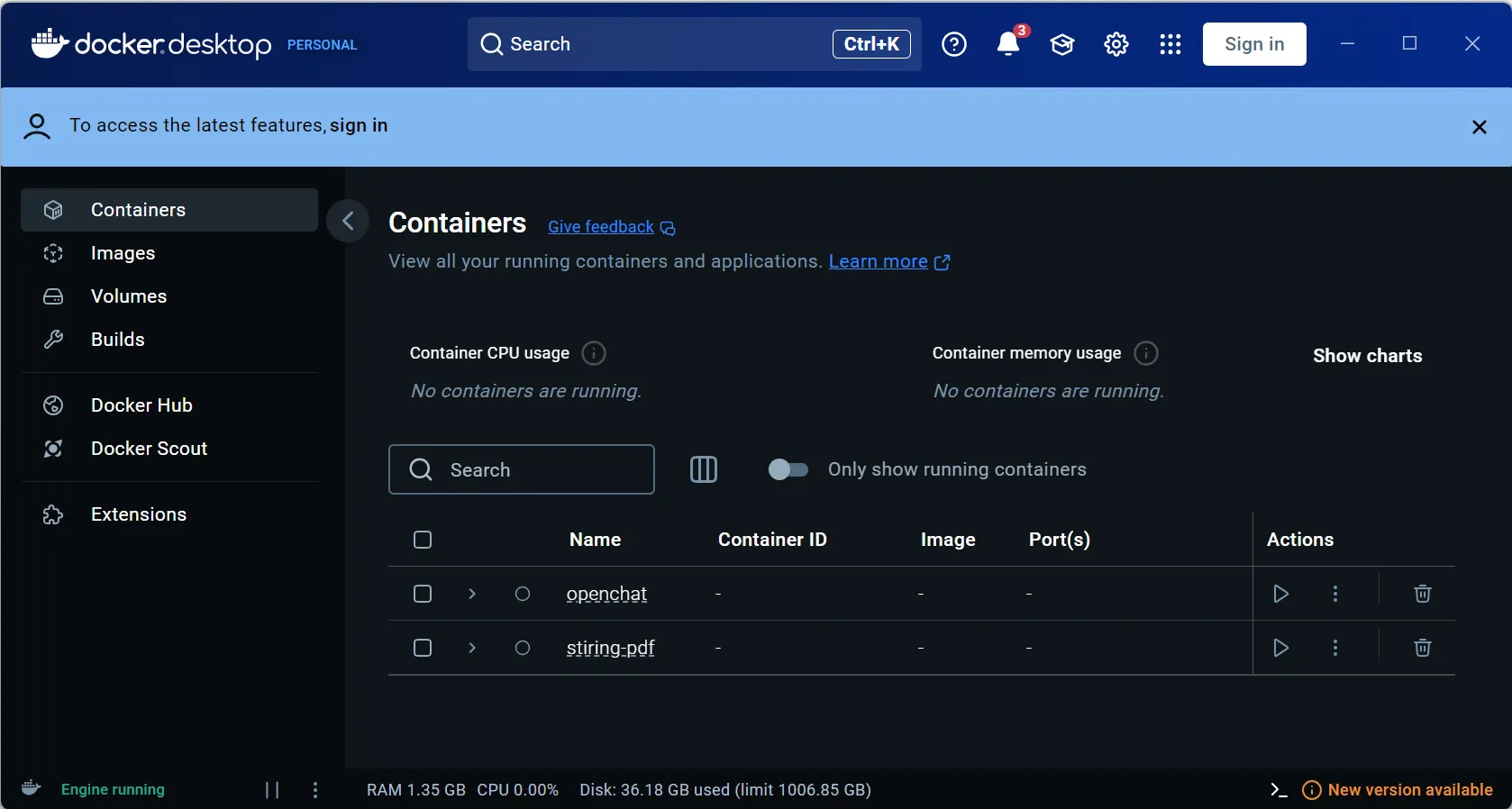

Step 1: Install Docker

- Visit https://www.docker.com/products/docker-desktop

- Download and install Docker Desktop for your OS.

- Launch Docker Desktop and ensure it’s running.
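To confirm from a terminal that Docker is installed and its engine is running, a quick check along these lines may help. This is a minimal sketch that assumes a Bash-like shell (for example Git Bash or WSL on Windows); `docker_status` is just an illustrative variable name.

```shell
# Check whether the docker CLI is on PATH and whether the engine answers.
if command -v docker >/dev/null 2>&1; then
  # Print the installed client version.
  docker --version
  # "docker info" talks to the engine, so it fails if Docker Desktop is not running.
  if docker info >/dev/null 2>&1; then
    docker_status="running"
  else
    docker_status="installed but not running"
  fi
else
  docker_status="not installed"
fi
echo "Docker: $docker_status"
```

If the result is anything other than "running", start Docker Desktop (or finish installing it) before moving on.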

What is Docker?

Docker helps you package and run apps in isolated containers so it’s easier to handle dependencies and environments.

Step 2: Create a Docker Compose Config File

- Create a folder and add a file named docker-compose.yml.

- Copy this into the docker-compose.yml file:

    services:
      open-webui:
        image: ghcr.io/open-webui/open-webui:ollama
        container_name: open-webui
        restart: always
        ports:
          - '3000:8080'
        volumes:
          - open-webui:/app/backend/data

    volumes:
      open-webui:
        driver: local

What is Docker Compose?

Docker Compose allows you to define and run multiple containers from a single YAML file. It simplifies spinning everything up with one command.
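Because YAML is sensitive to indentation, it can be worth validating the file before starting anything. The sketch below assumes a Bash-like shell and that it is run from the folder containing docker-compose.yml; the `compose_file` and `check` variable names are illustrative only.

```shell
compose_file="docker-compose.yml"   # file created in Step 2

# Only attempt validation when both the file and the docker CLI are present.
if [ -f "$compose_file" ] && command -v docker >/dev/null 2>&1; then
  # "config --quiet" parses the file and prints nothing unless there is a problem.
  if docker compose -f "$compose_file" config --quiet; then
    check="valid"
  else
    check="failed (invalid file or Compose unavailable)"
  fi
else
  check="skipped (file or docker not found)"
fi
echo "Compose file check: $check"
```

A "failed" result usually points to an indentation problem in the file, so comparing it against the listing in Step 2 is a reasonable first step.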

Step 3: Use Docker Compose to handle the rest

- Open your terminal (CMD, PowerShell, Bash, etc).

- Navigate to your folder:

    cd path\to\your\docker-compose-folder

- Then run the Docker Compose file:

    docker compose up

  This pulls the image and launches the OpenChat server locally.

- Visit http://localhost:3000 and start chatting.

- To stop and remove the container, run:

    docker compose down

This setup helps you quickly deploy a local GPT-like environment using Docker, ensuring your conversations never leave your machine.
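While the container is up, you can also check from a second terminal that the web interface is answering on port 3000. This is a rough sketch that assumes a Bash-like shell with `curl` installed; the `url`, `status`, and `result` names are illustrative.

```shell
url="http://localhost:3000"   # host port from the '3000:8080' mapping

# Ask curl for only the HTTP status code; "000" means no connection was made.
status=$(curl -s -o /dev/null -w "%{http_code}" --max-time 5 "$url" 2>/dev/null || true)
status=${status:-000}

case "$status" in
  2??|3??) result="reachable (HTTP $status)" ;;
  000)     result="not reachable; the container may still be starting" ;;
  *)       result="responded with HTTP $status" ;;
esac
echo "Open WebUI at $url: $result"
```

If the page stays unreachable, `docker compose logs` in the project folder shows what the container printed while starting.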

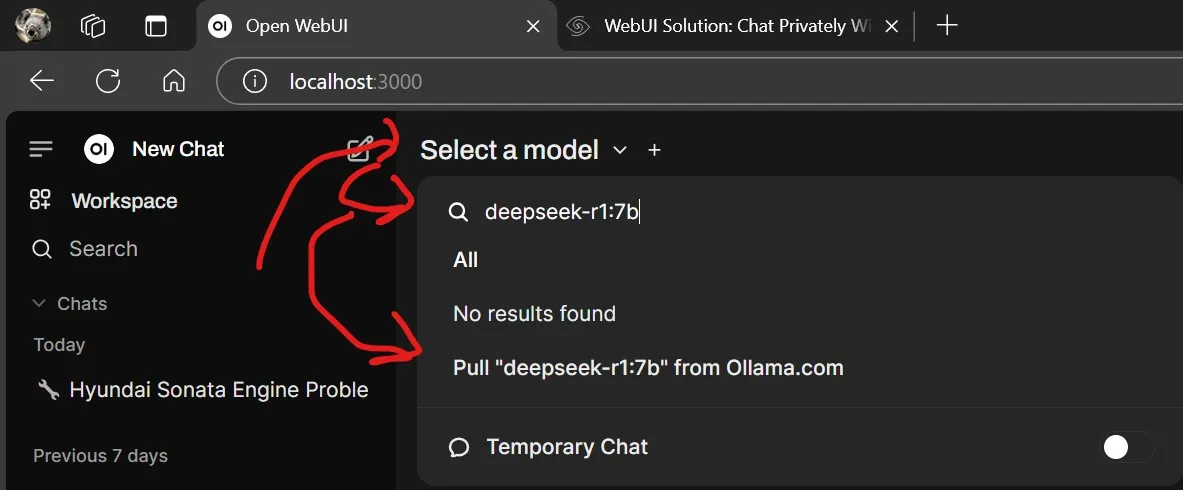

Try Downloading a New Model

In this example, we will install DeepSeek R1. There are two ways to download a model:

The Fastest Way

Note: This method is broken right now, but it may still be worth a try.

- Go to the Model Selector in Open WebUI.

- Enter “deepseek-r1:7b” (or another variant).

- If the model isn’t found, OpenChat will prompt you to download it via Ollama.

- Once downloaded, select it in the Model Selector.

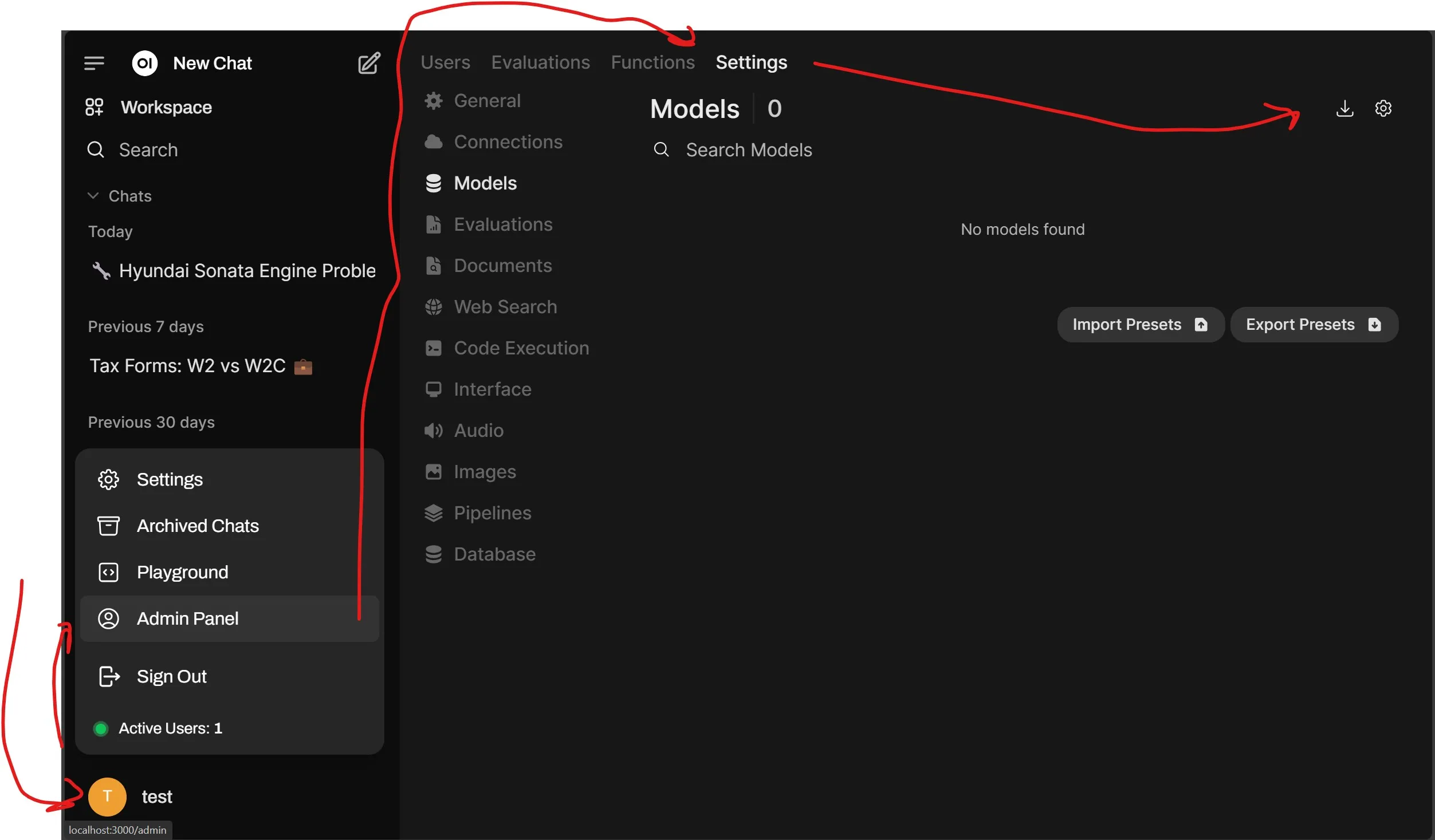

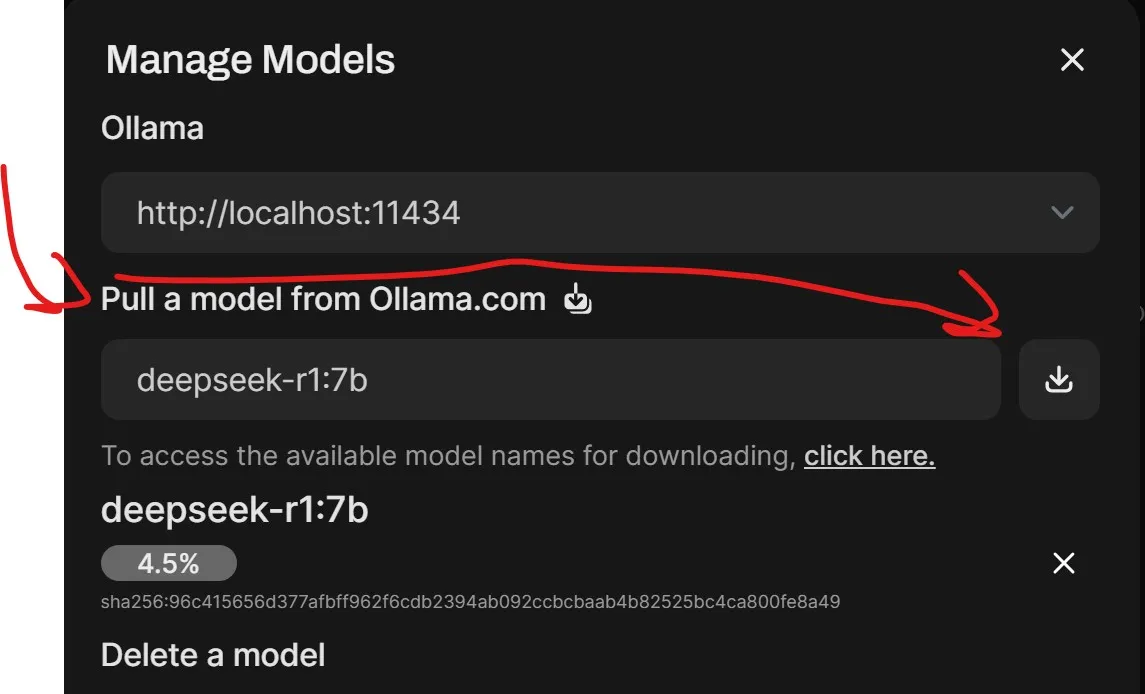

The Admin Way

- Click on your profile.

- Click the “Admin Panel” button.

- Click the “Settings” tab.

- Click on the “Download Icon” button.

- Under “Pull a model from Ollama.com”, enter “deepseek-r1:7b” (or another variant).

- Click on the “Download Icon” button.

- Wait for the download to complete.
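If neither route works in the browser, the model can probably also be pulled from the command line. The sketch below assumes the `:ollama` image ships the `ollama` CLI inside the container and that the container is named `open-webui`, as set by `container_name` in the Compose file; `pull_model` and `list_models` are hypothetical helper names, not part of Docker or Ollama.

```shell
container="open-webui"   # container_name from docker-compose.yml
model="deepseek-r1:7b"   # model tag used in this tutorial

# Assumption: the ollama CLI is available inside the running container.
pull_model() {
  # Download the model into the Ollama instance running in the container.
  docker exec "$container" ollama pull "$model"
}

list_models() {
  # Show the models that Ollama currently has installed.
  docker exec "$container" ollama list
}
```

With the container running, `pull_model && list_models` downloads the model and then lists what is installed; the model should then be selectable in the Model Selector.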

Enjoy Private AI

With OpenChat, you get a powerful, offline LLM solution on your own hardware—perfect for private GPT-like usage or secure on-premise AI projects. Check out the docs for more tips!